Some time late last Sunday night, I stumbled upon a ~~fight~~ discussion on Twitter. It turns out there are actually more threads to it than I’ve reproduced here, so if you’re really keen, do feel free to click through on individual tweets to see where it went. Here I’ve only reproduced the thread I read at the time. It starts with this:

Bacon in Four Easy Steps

My wife and I have been making our own bacon since January this year. The year is almost over so it’s well past time I documented the process for posterity, especially since I expect my typing to remain legible for longer than my handwriting. Also, I know there are at least a couple of people out there who are actually interested in this 😉

Happiness is a Hong Kong SIM

In 1996 Regurgitator released a song called “Kong Foo Sing”. It starts with the line “Happiness is a Kong Foo Sing”, in reference to a particular brand of fortune cookie. But one night last week at the OpenStack Summit, I couldn’t help but think it would be better stated as “Happiness is a Hong Kong SIM”, because I’ve apparently become thoroughly addicted to my data connection.

I was there with five other SUSE engineers who work on SUSE Cloud (our OpenStack offering): Ralf Haferkamp, Michal Jura, Dirk Müller, Vincent Untz and Bernhard Wiedemann. We also had SUSE crew manning a booth which had one of those skill tester machines filled with plush Geekos. I didn’t manage to get one. Apparently my manual dexterity is less well developed than my hacking skills, because I did make ATC thanks to a handful of openSUSE-related commits to TripleO (apologies for the shameless self-aggrandizement, but this is my blog after all).

Given this was my first design summit, I thought it most sensible to first attend “Design Summit 101”, to get a handle on the format. The summit as a whole is split into general sessions and design summit sessions, the former for everyone, the latter intended for developers to map out what needs to happen for the next release. There are also vendor booths in the main hall.

Roughly speaking, design sessions get a bunch of people together with a moderator/leader and an etherpad up on a projector, which anyone can edit. Whatever the topic is then gets hashed out over the next forty-odd minutes. It’s actually a really good format. In the sessions I was in, anyone who wanted to speak or had something to offer was heard. Everyone was courteous, and very welcoming of input, and of newcomers. Actually, as I remarked on the last day towards the end of Joshua McKenty’s “Culture, Code, Community and Conway” talk, everyone is terrifyingly happy. And this is not normal, but it’s a good thing.

As I’ve been doing high availability and storage for the past several years, and have also spent time on SUSE porting and scalability work on Crowbar, I split my time largely between HA, storage and deployment sessions.

On the deployment front, I went to:

- HA/Production Configuration, where the pieces of OpenStack that TripleO needs to deploy in a highly available manner were discussed (actually this could have been discussed for a solid week 😉)

- Stable Branch Support and Update Futures, about updating images made for TripleO.

- An Evaluation of OpenStack Deployment Frameworks, where two guys from Symantec discussed the evaluation they’d done of Fuel, JuJu/MaaS, Crowbar, Foreman and Rackspace Private Cloud. In short, nothing was perfect, but Crowbar 1.6 performed the best (i.e. met their requirements better than any of the other solutions tested).

- Roundtable: Deploying and Upgrading OpenStack.

- OpenStack’s Bare Metal Provisioning Service, wherein I attained a better understanding of Ironic.

- It Not Just An Unicorn, Updating Our Public Cloud Platform from Folsom to Grizzly – how eNovance manage upgrades. Automate all the things and test, test, test. Binary updates are done by rsyncing prepared trees, but everything can be rolled back and forwards, because everything is in revision control. It sounds like they’ve done a very thorough job in their environment. I’m less sure this technique is applicable in a generic fashion.

- The Road to Live Upgrades. Notably they want to add a live upgrade test as a commit gate.

- Hardware Management Ramdisk. Lots of work to do here for Ironic to deploy ramdisks that handle tasks such as firmware updates, RAID configuration, etc.

- Firmware Updates (followed right on from the previous session).

- Making Ironic Resilient to Failures (what do you do if your TFTP/PXE server goes away?)

- Compass – Yet Another OpenStack Deployment System, from Huawei, to be released as open source under the Apache 2.0 license “soon” (end of November). A layer on top of Chef, but with other configuration tools as pluggable modules. If you squint at it just right, I’d argue it’s not dissimilar to Crowbar, at least from a high level.

On High Availability:

- Practical Lessons from Building a Highly Available OpenStack Private Cloud (Ceph for all storage, HA via four separate Pacemaker clusters). Notably the cluster running compute can scale out by just adding more nodes.

- High Availability Update: Havana and Icehouse, wherein I attempted to look scary sitting in the front row wearing my STONITH Deathmatch t-shirt. I hope Florian and Syed will forgive my butchering their talk by summarizing it as: If you’re using MySQL, you want Galera. RabbitMQ still has consistency issues with mirrored queues and there can be only one Neutron L3 agent, so you need Pacemaker for those at least, so using Pacemaker to “HA all the things” is still an eminently reasonable approach (haproxy is great for load balancing, but no good if you have a service that’s fundamentally active/passive). Use Ceph for all your storage.

- Database Clusters as a Service in OpenStack: Integrated, Scalable, Highly Available and Secure. Focused on MySQL/MariaDB/Percona, Galera and variants thereof, which combinations are supported by Rackspace, HP Cloud and Amazon, and various deployment considerations (including replication across data centers).
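To make the haproxy-versus-Pacemaker point from the HA talk a little more concrete, here’s a toy sketch contrasting the two placement policies. It is not how haproxy or Pacemaker are actually implemented; the node names and function names are hypothetical, and it only shows why spreading requests across every healthy node suits an active/active service but not one that must run on exactly one node at a time.

```python
from itertools import cycle

# Hypothetical node names, purely for illustration.
BACKENDS = ["node-a", "node-b", "node-c"]


def round_robin(backends, healthy, n):
    """Spread n requests across every healthy backend in turn,
    roughly what a load balancer does for an active/active service."""
    live = [b for b in backends if b in healthy]
    if not live:
        return []
    rr = cycle(live)
    return [next(rr) for _ in range(n)]


def active_passive(backends, healthy):
    """Pick the single node that should run the service: the first healthy
    node in priority order. Every other node stays passive until failover."""
    for b in backends:
        if b in healthy:
            return b
    return None
```

With all three nodes healthy, `round_robin` sends traffic to every node, which is exactly what you don’t want for something like the single Neutron L3 agent; `active_passive` keeps the service on one node and only moves it when that node is no longer healthy.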

On Storage:

- Encrypted Block Storage: Technical Walkthrough. This looks pretty neat. Crypto is done on the compute host via dm-crypt, so everything is encrypted in the volume store and even over the wire going to and from the compute host. It still needs work (naturally); notably, it currently uses a single static key, though later it will use Barbican.

- Swift Drive Workloads and Kinetic Open Storage. Sadly I had to skip out of this one early, but Seagate now have an interesting product: disks (and some enclosures) that present themselves as key/value stores over Ethernet, rather than as block devices. The idea is to remove a whole lot of layers of the storage stack to try to get better performance.

- Real World Usage of GlusterFS + OpenStack. Interesting history of the project, what the pieces are, and how they now provide an “all-in-one” storage solution for OpenStack.

- Ceph: The De Facto Storage Backend for OpenStack. It was inevitable that this would go back-to-back with a GlusterFS presentation. All storage components (Glance, Cinder, object store) unified. Interestingly, the libvirt_image_type=rbd option lets you directly boot all VMs from Ceph (at least if you’re using KVM). Is it the perfect stack? “Almost” (Glance images are still copied around more than they should be, but there’s a patch for this floating around somewhere; also some snapshot integration work is still necessary).

- Sheepdog: Yet Another All-In-One Storage for Openstack. So everyone is doing all-in-one storage for OpenStack now 😉 I haven’t spent any time with Sheepdog in the past, so this was interesting. It apparently tries to make minimal assumptions about the underlying kernel and filesystem, yet supports thousands of nodes, is purportedly fast and small (<50MB memory footprint) and consists of only 35K lines of C code.

- Ceph OpenStack Integration Unconference (gathering ideas to improve Ceph integration in OpenStack).

Around all this of course were many interesting discussions, meals and drinks with all sorts of people; my immediate colleagues, my some-time partners in crime, various long-time conference buddies and an assortment of delightful (and occasionally crazy) new acquaintances. If you’ve made it this far and haven’t been to an OpenStack summit yet, try to get to Atlanta in six months or Paris in a year. I don’t know yet whether or not I’ll be there, but I can pretty much guarantee you’ll still have a good time.

Tied to the Rails

Having published a transcript of the talk I gave last year at OSDC 2012, I stupidly decided I’d better do the same thing with this year’s OSDC 2013 talk. It’s been edited slightly for clarity (for example, you’ll find very few “ums” in the transcript) but should otherwise be a reasonably faithful reproduction.

A Cosmic Dance in a Little Box

It’s Hack Week again. This time around I decided to look at running TripleO on openSUSE. If you’re not familiar with TripleO, it’s short for OpenStack on OpenStack, i.e. it’s a project to deploy OpenStack clouds on bare metal, using the components of OpenStack itself to do the work. I take some delight in bootstrapping of this nature – I think there’s a nice symmetry to it. Or, possibly, I’m just perverse.

Anyway, onwards. I had a chat to Robert Collins about TripleO while at PyCon AU 2013. He introduced me to diskimage-builder and suggested that making it capable of building openSUSE images would be a good first step. It turned out that making diskimage-builder actually run on openSUSE was probably a better first step, but I managed to get most of that out of the way in a random fit of hackery a couple of months ago. Further testing this week uncovered a few more minor kinks, two of which I’ve fixed here and here. It’s always the cross-distro work that seems to bring out the edge cases.

Then I figured there’s not much point making diskimage-builder create openSUSE images without knowing I can set up some sort of environment to validate them. So I’ve spent large parts of the last couple of days working my way through the TripleO Dev/Test instructions, deploying the default Ubuntu images with my openSUSE 12.3 desktop as VM host. For those following along at home, the install-dependencies script doesn’t work on openSUSE (some manual intervention required, which I’ll try to either fix, document, or both, later). Anyway, at some point last night, I had what appeared to be a working seed VM, and a broken undercloud VM which was choking during cloud-init:

Calling 'http://169.254.169.254/2009-04-04/meta-data/instance-id' failed Request timed out

Figuring that out, well… There I was with a seed VM deployed from an image built with some scripts from several git repositories, automatically configured to run even more pieces of OpenStack than I’ve spoken about before, which in turn had attempted to deploy a second VM, which wanted to connect back to the first over a virtual bridge and via the magic of some iptables rules and I was running tcpdump and tailing logs and all the moving parts were just suddenly this GIANT COSMIC DANCE in a tiny little box on my desk on a hill on an island at the bottom of the world.

It was at this point I realised I had probably been sitting at my computer for too long.

It turns out the problem above was due to my_ip being set to an empty string in /etc/nova/nova.conf on the seed VM. Somehow I didn’t have the fix for this in my local source repo. An additional problem is that libvirt on openSUSE, like Fedora, doesn’t set uri_default="qemu:///system". This causes nova baremetal calls from the seed VM to the host to fail, as mentioned in bug #1226310. This bug is reportedly fixed, but the fix apparently doesn’t work for me (another thing to investigate), so I went with the workaround of putting uri_default="qemu:///system" in ~/.config/libvirt/libvirt.conf.
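Both fixes above are easy to script if you find yourself rebuilding this environment often. Here’s a minimal sketch, assuming nova.conf parses as plain INI with my_ip in the [DEFAULT] section. The function names are hypothetical and none of this is part of TripleO; it just checks for the empty my_ip and appends the libvirt.conf workaround if no uri_default line is present yet.

```python
import configparser
from pathlib import Path


def my_ip_is_empty(nova_conf_text):
    """True if [DEFAULT] my_ip is present but set to an empty string."""
    # interpolation=None so stray '%' characters in other options are harmless;
    # strict=False tolerates repeated options, which nova.conf can contain.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(nova_conf_text)
    value = parser.get("DEFAULT", "my_ip", fallback=None)
    return value is not None and value.strip().strip("\"'") == ""


def ensure_uri_default(conf_path="~/.config/libvirt/libvirt.conf"):
    """Append uri_default = "qemu:///system" unless an uncommented
    uri_default line already exists. Returns True if the file was changed."""
    path = Path(conf_path).expanduser()
    existing = path.read_text() if path.exists() else ""
    if any(line.strip().startswith("uri_default") for line in existing.splitlines()):
        return False
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a") as f:
        if existing and not existing.endswith("\n"):
            f.write("\n")
        f.write('uri_default = "qemu:///system"\n')
    return True
```

Usage would be something like `my_ip_is_empty(Path("/etc/nova/nova.conf").read_text())` on the seed VM and `ensure_uri_default()` on the host. The append-only approach deliberately leaves any existing libvirt.conf contents alone.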

So now (after a rather spectacular amount of disk and CPU thrashing) there are three OpenStack clouds running on my desktop PC. No smoke has come out.

- The seed VM has successfully spun up the “baremetal_0” undercloud VM and deployed OpenStack to it.

- The undercloud VM has successfully spun up the “baremetal_1” and “baremetal_2” VMs and deployed them as the overcloud control and compute nodes.

- I have apparently booted a demo VM in the overcloud, i.e. I’ve got a VM running inside a VM, although I haven’t quite managed to ssh into the latter yet (I suspect I’m missing a route or a firewall rule somewhere).

I think I had it right last night. There is a giant cosmic dance being performed in a tiny little box on my desk on a hill on an island at the bottom of the world.

Or, I’ve been sitting at my computer for too long again.

Coda

Keep your eyes on the road, your hands upon the wheel

— Roadhouse, The Doors

It’s been a bit over two and a half months since I rolled my car on an icy corner on the way to the last day of PyCon AU 2013. My body works properly again modulo some occasional faint stiffness in my right side and I’ve been discharged by my physiotherapist, so I thought publishing some retrospective thoughts might be appropriate.

I Want My… I Want My… NBN

I thought I’d start this post with some classic Dire Straits, largely for the extreme tech culture shock value, but also because “MTV” has the same number of syllables as “NBN”. I’ll wait while you watch it.

Now that that’s out of the way, I thought it might be interesting to share my recent NBN experience. Almost a year ago I said “Yay NBN! Bring it on! Especially if I don’t get stuck on satellite”. Thankfully it turns out I didn’t get stuck on satellite; instead I got NBN fixed wireless. For the uninitiated, this involves an antenna on your roof, pointed at a nearby tower:

An NTD (Network Terminating Device) is affixed to a wall inside your house:

The box on the left is the NTD, the box on the right is the line from the antenna on the roof. Not pictured are the four ports on the bottom of the NTD, one of which is now plugged into a BoB Lite from iiNet. According to the labels on the NTD, the unit remains the property of NBN Co, and you’re not allowed to tamper with it:

Getting online is easy. Whatever you plug into the NTD just gets an IP address via DHCP – there’s none of that screwing around with PPPoE usernames and passwords. The BoB Lite lets you configure one of its ethernet ports as a “WAN source” instead of using ADSL, which is what I’m doing. Alternatively you could plug in a random Linux box and use that as a router, or even just plug your laptop straight in, which is what I did later when trying to diagnose a fault.

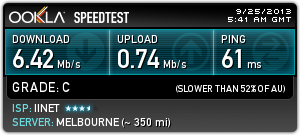

The wireless itself is LTE/4G, but unlike 4G on your mobile phone (which gets swamped to a greater or lesser degree when lots of people are in the same place), each NBN fixed wireless tower apparently serves a set number of premises (see the fact sheet PDF), so speed should remain relatively consistent. Here are the obligatory speed tests, first from my ADSL connection:

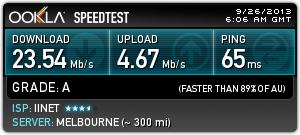

And here’s what I get via NBN wireless:

Boo-yah!

Speaking as someone who works from home and who has to regularly download and upload potentially large amounts of data, this is a huge benefit. Subjectively, random web browsing doesn’t seem wildly different than before, but suddenly being able to download openSUSE ISOs, update test VMs, and pull build dependencies at ~2 megabytes per second has markedly decreased the amount of time I spend sitting around waiting. And let’s not leave uploads out of the picture here – I push code up to github, I publish my blog, I upload tarballs, I contribute to wikis. I’ve seen too much discussion of FTTP vs. FTTN focus on download speed only, which seems to assume that we’re all passive consumers of MTV videos. OK, fine, I’m on wireless and FTTP is never going to be an option where I live, but I don’t want anyone to lose sight of the fact that being able to produce and upload content is a vital part of participating in our shiny new digital future. A reliable connection with decent download and upload speed is vital.
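For a rough sense of what ~2 megabytes per second means in practice, here’s the back-of-the-envelope arithmetic as a tiny sketch. The 4,700 MB ISO size is a hypothetical example, and the megabit conversion is only there because speed tests usually report megabits per second while download tools usually show megabytes.

```python
def megabits_to_megabytes(mbit_per_s):
    """Speed tests usually report megabits/s; divide by 8 for megabytes/s."""
    return mbit_per_s / 8


def transfer_minutes(size_mb, rate_mb_per_s):
    """Minutes to move size_mb megabytes at a steady rate_mb_per_s megabytes/s."""
    return size_mb / rate_mb_per_s / 60


# ~2 MB/s is about 16 Mbit/s on a speed test.
print(megabits_to_megabytes(16))  # → 2.0
# A hypothetical 4,700 MB DVD ISO at ~2 MB/s: 2,350 seconds.
print(round(transfer_minutes(4700, 2)))  # → 39
```

The same formula applies to uploads; just plug in the upload rate, which is the number that matters when you’re pushing code or tarballs out rather than pulling ISOs in.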

Now that I’ve covered the happy part of my NBN experience, I’ll also share the kinks and glitches for completeness. I rang up to get connected on August 1st. At the time, the next available installation appointment was August 21st. On that day I got a call saying the technician wouldn’t be able to make it because his laptop was broken. I offered to let them use my laptop instead, but apparently this isn’t possible, so the installation was rescheduled for September 6th. All attempts at escalating this (i.e. getting the subsequent 2.5-week delay reduced, because after all it was their fault they had a broken laptop) failed. By the time the right person at iiNet was able to rattle enough sabres in the direction of NBN Co, the new installation date was close enough that it didn’t make a difference anymore. To be clear, as far as I can tell, iiNet is not at fault; the problem seems to be one of bureaucracy combined with probable understaffing/overdemand of NBN Co (apparently this newfangled interwebs thingy is popular). As an aside, I mentioned the “broken laptop” problem to a friend, who said a friend of his had also had installation rescheduled with the same excuse. I’m not quite sure what to make of that, but I will state for the record that I seriously doubt our new fearless leaders would have been able to make matters any better had they been in power at the time.

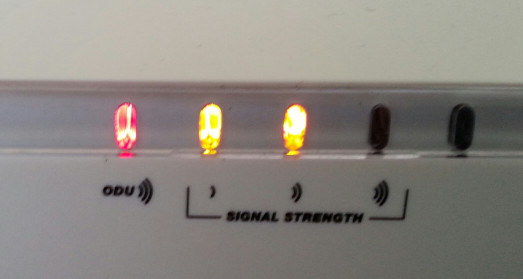

Anyway, installation finally went ahead and all was sweetness and light for just under three weeks, until one of those wacky spring days where it’s sun, then howling wind, then sideways rain, then sun again, then two orange signal strength lights and a red ODU light:

ODU stands for Outdoor Unit, which is apparently the antenna. For at least an hour, the lights cycled between red and green ODU, and two orange / three green signal strength. Half an hour on the phone to iiNet support, as well as plugging a laptop directly into the NTD, didn’t get me anywhere. I managed to get a DHCP lease on my laptop very briefly at one point, but otherwise the connection was completely hosed. The exceptionally courteous and helpful woman in iiNet’s Fibre team logged a fault with NBN Co, and I switched my ADSL – which I had kept for just such an emergency – back on, so that I could get some work done.

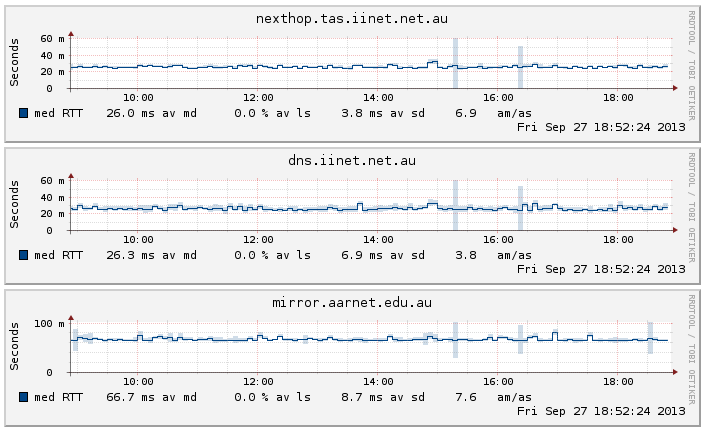

The next day everything was green, so I plugged back into the NTD. About an hour after this, I got a call from the same woman at iiNet saying they’d noticed I had a connection again and had thus cancelled the fault, but that she was going to keep an eye on things for a little while, and would check back with me over the next week or two. I also received an SMS with her direct email address, so I can advise her of any further trouble. I’ve since got smokeping running here against what I hope is a useful set of remote hosts so I’ll notice if anything goes freaky while I’m asleep or otherwise away from my desk:

I really wish I knew how to get a console on the NTD, or view some logs. I was told it’s basically a dumb box, but surely it knows a bit more internally than it indicates on the blinkenlights. As I said on Twitter the other day, I feel like a mechanic trapped in the driver’s seat of a car, with access only to the dashboard lights. Oh, well, fingers crossed…
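Smokeping is the right tool for the monitoring mentioned above, but the underlying idea (probe a set of remote hosts repeatedly, then record packet loss and median latency) is simple enough to sketch. This is a much cruder, swapped-in stand-in rather than how smokeping works: it times TCP connects instead of ICMP pings because raw ICMP sockets need extra privileges, and the host list you’d pass in is up to you.

```python
import socket
import statistics
import time


def tcp_rtt_ms(host, port=80, timeout=2.0):
    """Time a TCP connect to host:port in milliseconds, or None on failure.
    Connect time is only a rough stand-in for an ICMP round trip."""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass
    except OSError:  # covers timeouts, refused connections and DNS failures
        return None
    return (time.monotonic() - start) * 1000.0


def summarize(samples):
    """Return (loss %, median RTT in ms or None); None marks a lost probe."""
    if not samples:
        return 0.0, None
    ok = [s for s in samples if s is not None]
    loss = 100.0 * (len(samples) - len(ok)) / len(samples)
    return loss, (statistics.median(ok) if ok else None)


def probe(hosts, count=5, interval=1.0, port=80):
    """Probe each host `count` times; return {host: (loss %, median ms)}."""
    results = {}
    for host in hosts:
        samples = []
        for _ in range(count):
            samples.append(tcp_rtt_ms(host, port))
            time.sleep(interval)
        results[host] = summarize(samples)
    return results
```

Run from cron and appended to a log, this gives a poor man’s record of when the link drops out overnight; smokeping adds the long-term storage and the graphs.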

Rebooted Pork: A Recipe

Recording this for posterity.

Ingredients:

- Leftover roast pork (optimally home-grown, free-range and happy, about which another post when I find the time), diced or thinly sliced.

- A few onions, a couple of potatoes, some mushrooms, all thinly sliced.

- Capsicum (red or green or both), diced.

- Crushed garlic.

- BBQ sauce (plus possibly tomato paste – see how you go).

- Butter.

- Salt.

- Pepper (bonus points for Tasmanian bush pepper).

- Oregano.

- Cheese, chilli sauce, tortillas (optional).

Procedure:

- Heat frypan, add pork.

- Add onions and garlic, fry for a while.

- Add butter, potatoes, mushrooms, BBQ sauce, salt, pepper, oregano, fry some more.

- Cover, lower heat, add water and/or tomato paste if it seems necessary.

- Go away for at least half an hour. Check your email. Read Twitter. Do some actual useful work. But make sure you’re within smelling distance of the kitchen, just in case.

- Add capsicum.

- Wait a bit more, depending on how well done you like your capsicum.

- Serve, either in a bowl or wrapped in a tortilla, with or without cheese and chilli sauce according to taste.

WTF is OpenStack

The video for my WTF is OpenStack talk (along with all the other PyCon AU 2013 videos) made it onto YouTube some time in the last few days. Here it is:

Credit where credit is due; this presentation was based on “Cloud Computing with OpenStack: An Introduction” by Martin Loschwitz from Hastexo. Thanks Martin!

Achievement Unlocked: A Cautionary Tale

This is the story of how I rolled my car and somehow didn’t die on the way to the last day of PyCon AU 2013. I’m writing this partly for catharsis (I think it helps emotionally to talk about events like this) and partly in the hope that my experience will remind others to be safe on the roads. If you find descriptions of physical and mental trauma distressing, you should probably stop reading now, but rest assured that I am basically OK.
